MBR disinfection: playing with numbers?

Simon Judd has over 35 years’ post-doctorate experience in all aspects of water and wastewater treatment technology, both in academic and industrial R&D. He has (co-)authored six book titles and over 200 peer-reviewed publications in water and wastewater treatment.

Stephen Katz et al.'s recent article 'The disinfection capability of MBRs: credit where credit’s due' raises a few rather intriguing questions concerning the measurement of the disinfection capability of an MBR and, for that matter, any other water and wastewater treatment technology.

The degree of disinfection is normally presented as the log removal value or LRV. This value is simply the log (to base 10) of the ratio of the feedwater to treated water concentration for the target microorganism. No problems with the mathematical logic: if an organism is a thousand times more concentrated in the feed than in the treated water the LRV is 3. If it is a million times more concentrated then the LRV is 6, and so on.
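The arithmetic can be sketched in a few lines of Python. The function name `log_removal_value` and the example concentrations are illustrative assumptions, not taken from the article; the only requirement is that both concentrations are in the same units.

```python
import math


def log_removal_value(feed_conc, treated_conc):
    """Return log10(feed / treated).

    Both concentrations must be in the same units (for example,
    organisms per litre). The function name is hypothetical.
    """
    if feed_conc <= 0 or treated_conc <= 0:
        # The log of a ratio is undefined for zero or negative values,
        # which is exactly the difficulty with a non-detect in the permeate.
        raise ValueError("concentrations must be positive")
    return math.log10(feed_conc / treated_conc)


# Illustrative numbers only: feed a thousand and a million times
# more concentrated than the treated water.
lrv_thousand = log_removal_value(1e6, 1e3)
lrv_million = log_removal_value(1e6, 1.0)
print(round(lrv_thousand, 2), round(lrv_million, 2))
```

Note that the function has to reject a treated water concentration of zero, since the ratio is then undefined.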

This is all fine and dandy, provided the concentration in the permeate is greater than zero. But, for an MBR or other membrane technologies, it very often is zero. Which makes the LRV infinitely large.

The solution to this conundrum is to assume that the microorganism is present at no higher a concentration than the limit of detection (LoD) of the analytical method. But this in itself is problematic. The detectability of the microorganism is largely dependent on the sample size. If the treated water sample volume is increased ten-fold and there is still no microorganism detected, then the LoD is lowered by an order of magnitude and the minimum LRV correspondingly increases by one unit. The reverse is true if the sample volume is commensurately reduced.
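A rough sketch of the sample-volume effect follows. It assumes, purely for illustration, that the LoD corresponds to one organism in the volume analysed and that the feed holds 10,000 organisms per litre; neither figure comes from the article, and real analytical methods define their LoD in more involved ways.

```python
import math


def min_lrv_from_non_detect(feed_conc_per_litre, sample_volume_litres):
    """Minimum LRV that can be claimed when nothing is detected.

    Assumption (hypothetical): the LoD is one organism in the
    volume of treated water analysed.
    """
    lod_per_litre = 1.0 / sample_volume_litres
    return math.log10(feed_conc_per_litre / lod_per_litre)


feed = 1e4  # organisms per litre, illustrative value only
for volume in (0.1, 1.0, 10.0):
    # Each ten-fold increase in sample volume adds one unit to the
    # minimum LRV, although the membrane has not changed at all.
    print(volume, round(min_lrv_from_non_detect(feed, volume), 2))
```

The membrane plays no part in this calculation: only the feed concentration and the sample volume appear in it.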

But if this seems arbitrary, then consider the other part of the ratio – the feedwater concentration. If the feedwater concentration increases by an order of magnitude then the same situation applies: the ratio of the feed to treated water concentration (the latter being set by the LoD) also increases by an order of magnitude and the LRV commensurately increases by one unit.

So what does this mean? Well, put simply, it means the LRV is based on the ratio of something which varies wildly over something that is set by the sensitivity of the analytical method. Provided the treated water concentration is zero the LRV has very little to do with the performance of the membrane at all. In the campaign on which the article is based no pathogenic microorganisms were ever detected in the MBR permeate – even when larger sample volumes were taken. Given that some microorganisms are generally present in sewage at concentrations 100 to 10,000 times higher than others and may still be undetectable in the treated water, this means that their LRV will be much higher: two to four units higher for the same LoD. This doesn’t reflect the relative ease with which they’re removed, merely the limitations of the arithmetic.
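To put hypothetical numbers on this, the sketch below compares two organisms that are both undetected in the permeate. The feed concentrations, the organism labels and the LoD of one organism per litre are all assumptions chosen for illustration, not data from the study.

```python
import math

LOD_PER_LITRE = 1.0  # assumed limit of detection, same for both organisms


def credited_lrv(feed_conc_per_litre, lod_per_litre=LOD_PER_LITRE):
    """LRV assigned when the permeate result is a non-detect (hypothetical helper)."""
    return math.log10(feed_conc_per_litre / lod_per_litre)


# Illustrative feed concentrations (organisms per litre), 1,000-fold apart.
feed = {"scarce organism": 1e3, "abundant organism": 1e6}
lrvs = {name: credited_lrv(conc) for name, conc in feed.items()}

for name, lrv in lrvs.items():
    print(name, round(lrv, 2))

# The gap between the two values comes entirely from the feed
# concentrations; the membrane rejected both organisms completely.
gap = lrvs["abundant organism"] - lrvs["scarce organism"]
print("difference in LRV:", round(gap, 2))
```

Under these assumptions the more abundant organism earns three more log units than the scarce one, even though the membrane removed both to below detection.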

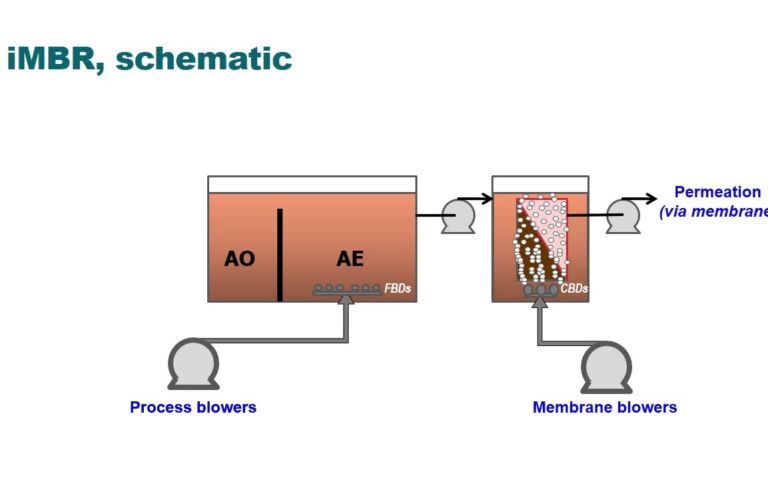

And why does this matter? It matters because the regulator assigns 'credits' – the expected LRV – for individual water/wastewater treatment technologies. The article suggests values of 3.3 and 2.7 for protozoa and viruses respectively as being reasonable for an MBR, based on the results from the study. But these values are inevitably conservative. For an unbreached (i.e. undamaged) membrane, rejection of any microorganism or particle significantly greater than the membrane pore size is absolute. Permeation through the membrane of such entities is only realistically possible if the membrane is breached.

In reality, the disinfection capability of a membrane process relates to the risk of membrane breaching. As is so often the case with vitally important practical aspects of water and wastewater technology, there is not a huge amount of published academic research in this area. However, common sense suggests that the mechanical integrity of the membrane is likely to be challenged by sharp particles – such as shards of metal produced from tapping threads – and manual handling. Also, it would be reasonable to expect the risk of integrity failure to increase with membrane age and, possibly, with excessive and/or aggressive chemical cleaning.

And this then implies that, rather than calculating the LRV from arbitrary parameters such as the level of contamination in the feedwater and the sensitivity of the permeate concentration measurement, it would perhaps be more prudent to try and correlate the risk of membrane integrity failure with relevant system parameters. These might be things such as the mixed liquor coarse solids concentration, the level of exposure to cleaning chemicals and the membrane age.

And, yes, this is probably a pipe dream: there is no prospect of any of the above empirical correlations being generated anytime soon. But at least the numbers would be real, meaningful and, one would hope, less than infinity.